Introduction

Neural networks consist of interconnected layers of nodes, or “neurons,” that process and transmit information. These networks can learn from vast amounts of data, which makes them powerful tools for tasks such as image and speech recognition, natural language processing, and predictive analytics.

Where did this idea come from?

Neural networks, a family of machine learning models, are inspired by the structure and function of the human brain.

If we look at the brain or nervous system under a microscope, we find enormous numbers of nerve cells, called neurons, connected to one another in vast networks. We don’t have precise figures, but the human brain is estimated to contain something like 85 to 87 billion neurons, and a single neuron can be connected to as many as 8,000 others. Each neuron performs a tiny, very simple pattern recognition task, and when it detects its pattern, it sends a signal to all the neurons it is connected to.

These are the neural networks of the human brain, although we still don’t understand in detail how they carry out complex pattern recognition tasks.

Implementation of Neural Networks with Software

We can implement this in software, using data structures to represent the network and code to manage it, as a simplified, abstract model of the brain. The idea goes back to the 1940s and the researchers McCulloch and Pitts, who were struck by how much the structure we see in the brain resembles an electrical circuit. They wondered whether the brain could be implemented with electrical circuits, but they didn’t have the means to do it. Still, the idea stuck. Researchers began to look seriously at doing this in software around 1960, and there was another flurry of interest in the 1980s, but it became practical only in this century, for three reasons:

1 Scientific advances in machine learning

2 The availability of big data

3 The availability of supercomputer-level computing power

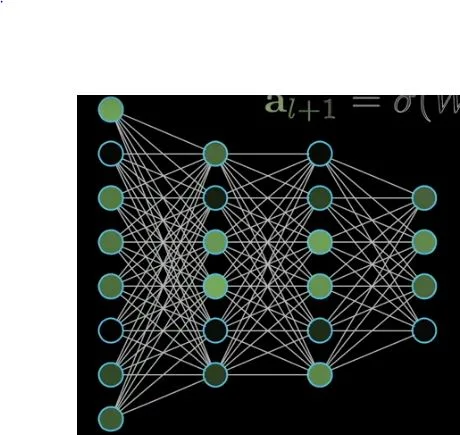

Representation of Neural Networks

Artificial neural networks are an abstract, highly simplified model of the neural networks in the human brain. They are implemented using data structures that represent the layers, their cells, and the connections between cells, together with software that manages the network.

These networks are organized into layers of cells stacked one after another: the first layer, then the hidden layers, and finally the last layer. The cells within a single layer work in parallel.

The data enters the network through the cells of the first layer, which is why it is called the input layer.

The input layer is followed by one or more hidden layers, so called because they sit between the input layer and the output layer. The cells of the first hidden layer process the outputs of the input-layer cells and pass their results to the next hidden layer, and so on, until the signal reaches the last layer.

At the end of the network, the last layer, called the output layer, receives the results of the last hidden layer and produces the final prediction.
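The layer-by-layer flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes every cell is connected to every cell in the next layer, uses the sigmoid function as the activation function, omits biases, and uses made-up weights. The function name `forward` is hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(weights, inputs):
    """Pass `inputs` through the network one layer at a time.

    weights[k][j] holds the incoming weights of cell j in layer k + 1.
    Returns the activations of the output layer.
    """
    activations = inputs  # the input layer simply hands the data on
    for layer in weights:  # each hidden layer in turn, then the output layer
        activations = [
            sigmoid(sum(w * a for w, a in zip(cell_weights, activations)))
            for cell_weights in layer
        ]
    return activations


# Hypothetical weights: 2 input cells -> 2 hidden cells -> 1 output cell.
weights = [
    [[0.5, -0.5], [1.0, 1.0]],  # hidden layer: one row of weights per cell
    [[1.0, -1.0]],              # output layer
]
print(forward(weights, [1.0, 0.0]))
```

Each pass through the loop consumes the previous layer's outputs and produces the next layer's, which mirrors how the hidden layers hand their results forward until the output layer is reached.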

A network has exactly one input layer and one output layer but may have many hidden layers. The number and size of the hidden layers vary from network to network, and together they determine the network’s capacity and complexity.

The cells in the input layer have only output connections, the cells in the hidden layers have both input and output connections, and the cells in the output layer have only input connections.
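One way to hold this structure in memory is a list of layer sizes plus one weight matrix per pair of adjacent layers. The sketch below assumes fully connected layers, and the `Network` class is a hypothetical name used only for illustration. Because no matrix feeds into the first layer and none leaves the last, the input cells end up with only outgoing connections and the output cells with only incoming ones.

```python
import random


class Network:
    """A minimal fully connected network stored as nested Python lists."""

    def __init__(self, layer_sizes: list[int], seed: int = 0) -> None:
        # layer_sizes[0] is the input layer, layer_sizes[-1] the output layer,
        # and the sizes in between are the hidden layers.
        self.layer_sizes = layer_sizes
        rng = random.Random(seed)  # fixed seed keeps the example reproducible
        # weights[k][j][i] is the weight on the connection from cell i in
        # layer k to cell j in layer k + 1 (one matrix per pair of layers).
        self.weights = [
            [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
        ]


net = Network([3, 4, 2])  # 3 input cells, one hidden layer of 4 cells, 2 output cells
print(len(net.weights))                             # 2 (input->hidden, hidden->output)
print(len(net.weights[0]), len(net.weights[0][0]))  # 4 3
```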

The cells, or neurons, are the basic processing units of the network. Each neuron takes its inputs, combines them (typically as a weighted sum), passes the result through an activation function, and sends its output to the neurons in the next layer.

Each connection between two neurons has an associated weight, a number that scales the signal passing along that connection and thus determines how strongly one neuron influences the other.
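A single neuron can be sketched as a weighted sum of its inputs followed by an activation function. In this minimal sketch the function name `neuron_output` is hypothetical, biases are left out, and `math.tanh` simply stands in for whatever activation function a real network would use.

```python
import math
from typing import Callable, Sequence


def neuron_output(
    inputs: Sequence[float],
    weights: Sequence[float],
    activation: Callable[[float], float],
) -> float:
    """Compute one neuron's output: weighted sum of inputs, then activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one connection weight")
    # Each weight scales the signal arriving on its connection.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return activation(weighted_sum)


# Same inputs, different weights: a larger weight gives that input more influence.
print(neuron_output([1.0, 1.0], [0.9, 0.1], math.tanh))
print(neuron_output([1.0, 1.0], [0.1, 0.1], math.tanh))
```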

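Each neuron has an associated activation function, which decides whether, and how strongly, the neuron is activated given its incoming inputs. In its simplest form the activation function is a threshold: the neuron fires only if its combined input reaches a certain value.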
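The simplest activation function, a threshold (step) function, can be illustrated with a neuron that fires only when its weighted input reaches a chosen value. The weights and thresholds here are hand-picked for illustration, and the function names are hypothetical: with weights (1, 1), a threshold of 2 makes the neuron behave like a logical AND, and a threshold of 1 makes it behave like a logical OR.

```python
def step(weighted_sum: float, threshold: float) -> int:
    """Threshold activation: fire (1) if the input reaches the threshold, else 0."""
    return 1 if weighted_sum >= threshold else 0


def threshold_neuron(inputs, weights, threshold):
    """Weighted sum of the inputs, followed by the step activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return step(weighted_sum, threshold)


# With weights (1, 1) and threshold 2, the neuron fires only when both inputs are 1.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, threshold_neuron([a, b], [1, 1], threshold=2))
# prints one line each: 0 0 0 / 0 1 0 / 1 0 0 / 1 1 1
```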

The flow of processing from the input layer through the hidden layers to the output layer is called forward propagation. The reverse flow, from the output layer back through the hidden layers toward the input layer, is called back-propagation; it carries the error in the network’s prediction backward so that each connection weight can be adjusted.
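Both directions can be sketched for a tiny network with two inputs, two hidden cells, and one output cell. This minimal sketch assumes sigmoid activations, no biases, and a squared-error loss `0.5 * (y - target) ** 2`; these choices, the function names, and the weight values are illustrative assumptions, not the only way to set this up. The forward pass computes the prediction, and the backward pass applies the chain rule from the output cell back toward the inputs, producing one gradient per weight.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(w_hidden, w_out, x):
    """Forward propagation: input -> hidden layer -> single output cell."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    y = sigmoid(sum(w * hj for w, hj in zip(w_out, h)))
    return h, y


def backward(w_hidden, w_out, x, target):
    """Back-propagation for the loss 0.5 * (y - target) ** 2.

    Returns the gradient of the loss with respect to every weight, in the
    same shapes as w_hidden and w_out.
    """
    h, y = forward(w_hidden, w_out, x)
    # Error signal at the output cell: dLoss/dy times the sigmoid derivative.
    delta_out = (y - target) * y * (1.0 - y)
    grad_out = [delta_out * hj for hj in h]
    # Pass the error backward through each output weight to the hidden cells.
    delta_hidden = [delta_out * w_out[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
    grad_hidden = [[d * xi for xi in x] for d in delta_hidden]
    return grad_hidden, grad_out


# Hypothetical weights and a single training example.
w_hidden = [[0.1, -0.2], [0.4, 0.3]]  # 2 inputs -> 2 hidden cells
w_out = [0.5, -0.6]                   # 2 hidden cells -> 1 output cell
x, target = [1.0, 0.5], 1.0
grad_hidden, grad_out = backward(w_hidden, w_out, x, target)
print(grad_out)
print(grad_hidden)
```

A simple way to check a sketch like this is to nudge one weight by a tiny amount, rerun the forward pass, and compare the change in the loss with the corresponding back-propagated gradient.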

The weights of the connections are also called the network’s parameters (most networks also give each neuron a bias value, which counts as a parameter too). The number of parameters determines the size of the network.
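For a fully connected network, where every cell in one layer connects to every cell in the next, the number of weights follows directly from the layer sizes: two adjacent layers with n_in and n_out cells contribute n_in × n_out weights. The sketch below assumes that fully connected layout; the function name `count_parameters` is hypothetical, and counting one bias per non-input cell is optional.

```python
def count_parameters(layer_sizes: list[int], include_biases: bool = False) -> int:
    """Count the parameters of a fully connected network.

    Adjacent layers of sizes n_in and n_out are joined by n_in * n_out weights.
    Optionally add one bias per cell outside the input layer.
    """
    weights = sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:]) if include_biases else 0
    return weights + biases


print(count_parameters([3, 4, 2]))                       # 3*4 + 4*2 = 20
print(count_parameters([3, 4, 2], include_biases=True))  # 20 + (4 + 2) = 26
print(count_parameters([784, 128, 10]))                  # 784*128 + 128*10 = 101632
```

Widening a hidden layer or adding another one increases this count quickly, which is why the number and size of hidden layers govern how large a network is.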

Training a network means adjusting these parameters so that the network produces the desired outputs.
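One common way to make these adjustments is gradient descent: repeatedly nudge each parameter in the direction that reduces the error on the training data. The sketch below uses a single neuron with no activation function (a linear neuron) and a mean squared error. The training data, learning rate, and number of epochs are illustrative assumptions, and `train` is a hypothetical name. In a multi-layer network the gradients would come from back-propagation rather than from the one-line formula used here.

```python
def train(samples, learning_rate=0.1, epochs=1000):
    """Adjust the weights of a single linear neuron by gradient descent.

    samples: list of (inputs, desired_output) pairs.
    """
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs  # start from arbitrary (here: zero) parameters
    for _ in range(epochs):
        grads = [0.0] * n_inputs
        for x, target in samples:
            prediction = sum(w * xi for w, xi in zip(weights, x))
            error = prediction - target
            # Accumulate the gradient of the mean of 0.5 * error**2 per weight.
            for i, xi in enumerate(x):
                grads[i] += error * xi / len(samples)
        # Move every parameter a small step against its gradient.
        weights = [w - learning_rate * g for w, g in zip(weights, grads)]
    return weights


# Hypothetical training data generated by the rule y = 2*x1 - 3*x2.
data = [([1.0, 0.0], 2.0), ([0.0, 1.0], -3.0), ([1.0, 1.0], -1.0), ([2.0, 1.0], 1.0)]
weights = train(data)
print([round(w, 3) for w in weights])  # [2.0, -3.0]
```

Starting from all-zero weights, the loop recovers the rule that generated the data, which is exactly what "producing the desired outputs" means for this toy example.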